Utilities

This page introduces the command-line utilities (CLIs) that GenAI Studio provides and explains how to use them.

Introduction

GenAI Studio is an AI platform based on Ubuntu Linux. It provides several command-line interface (CLI) utilities that make GenAI Studio easier to manage. This document covers what you need to get familiar with them.

Before going further, note that all utilities are located in the bin folder under the GenAI Studio installation directory. If you run a utility from inside the bin directory and get an error such as app-ps: command not found, prefix the command with ./ so that the shell can find it. For example, ./app-up.
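As a minimal sketch of the point above, a small shell function can run any utility from the bin folder with the ./ prefix, regardless of the current directory. GENAI_HOME and run_util are hypothetical names used only for illustration; set GENAI_HOME to your actual installation directory.

```shell
# Hypothetical helper, not part of GenAI Studio.
# GENAI_HOME is an assumed variable holding the installation directory.
run_util() {
    util="$1"
    shift
    (
        # Work in a subshell so the caller's directory is left unchanged.
        cd "${GENAI_HOME:?set GENAI_HOME to the installation directory}/bin" || exit 1
        # The ./ prefix tells the shell to look in the current directory.
        "./$util" "$@"
    )
}
```

With this in place, run_util app-ps server should behave like running ./app-ps server from inside the bin folder.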

Service Management Utilities

The first group of utilities is the service management utilities, whose names start with the prefix app-. Most of them accept an extra argument, a specific service name, so that they show information only for that service. The service names you can provide are listed below:

autoheal

dcgm-exporter

flowise

ftp

grafana

node-exporter

ollama

postgresql

prometheus

qdrant

server

autoheal, dcgm-exporter, flowise, ftp, grafana, node-exporter, and prometheus have been available since the v1.1.x release.

Take special care: do not perform any action on these services, other than server, unless you know what you are doing.

app-up

This utility starts all services. To start only a single service, give that service name as the argument. Examples are listed below.

./app-up starts all services.

./app-up server starts the GenAI Studio server as well as all its dependent services.

app-down

This utility is the counterpart of app-up. It shuts all services down. Also, it can shut a single service down if you provide a specific service name as the argument.

app-logs

This utility provides an easy way to show the log messages of all services. Normally, it's recommended to give a service name as the argument so that it shows logs only for that specific service. In addition, the -f option makes the output follow new messages as they arrive, while the -t option shows the timestamp at which each message was logged.
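A minimal sketch of the recommended usage, assuming the current directory is the bin folder. The follow_logs wrapper is a hypothetical convenience, not part of GenAI Studio; it defaults to the server service and passes the -f and -t options described above.

```shell
# Hypothetical wrapper; assumes the current directory is the bin folder.
follow_logs() {
    # Default to the server service when no name is given.
    service="${1:-server}"
    # -f follows new messages, -t shows timestamps.
    ./app-logs "$service" -ft
}
```

Calling follow_logs ollama would follow the logs of the ollama service only.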

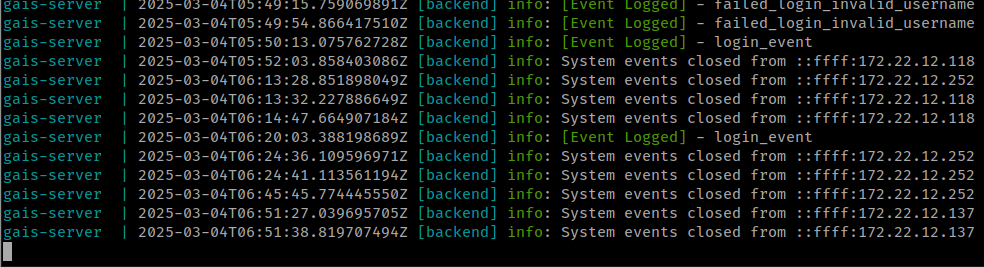

The image below was captured by issuing command ./app-logs server -ft.

app-ps

This utility shows which services are currently running. Although it accepts a service name like the other utilities, it is seldom used that way.

The next image shows the result of ./app-ps.

app-restart

This utility restarts services. Using it is not recommended, since services may require a full reload; run app-down followed by app-up instead.
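The recommended sequence can be sketched as follows, assuming the current directory is the bin folder. The full_restart function is a hypothetical helper, not part of GenAI Studio; it starts the services again only if the shutdown succeeded and forwards an optional service name to both utilities.

```shell
# Hypothetical helper; assumes the current directory is the bin folder.
full_restart() {
    # Shut down first, then start up only if the shutdown succeeded.
    # "$@" forwards an optional service name to both utilities.
    ./app-down "$@" && ./app-up "$@"
}
```

Running full_restart with no argument cycles all services, while full_restart server cycles only the server service.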

app-version

This utility simply shows the GenAI Studio version. It is the only utility that neither needs nor accepts a service name as an extra argument.

app-version has been available since the v1.1.x release.

Model Management Utilities

Whatever you want to do on the GenAI Studio platform, the model is almost certainly what you care about most. GenAI Studio handles two kinds of models: training models and inference models. Support for training models is not ready yet (it is planned for the near future), while inference models are already supported.

ollama-model

This utility imports models into, and exports models from, the Ollama server built into GenAI Studio, which provides the chat and embedding functionality. There are five scenarios in which it can simplify your task.

ollama-model has been available since the v1.1.x release.

All the commands in the subsequent examples use phi4 as the target model name.

1. Import a model from the online Ollama registry

If GenAI Studio can access the internet, you can use this format to import a specific model from the online Ollama registry into GenAI Studio. Check the online page for the available models.

2. Import a model from a model file

Import a model from the given model file into GenAI Studio. The model file must itself have been exported by ollama-model.

3. Export a model from the Ollama server built into GenAI Studio

Use this format when you want to back up models from GenAI Studio.

4. Export a model from an Ollama server running on localhost

If another Ollama instance is installed on localhost, you can use this format to export any model from that instance. The exported model is saved as a file with the .model suffix, called a model file. Such files can be imported into another GenAI Studio installation.

5. Export a model from a running Ollama container

Suppose an Ollama container instance is running on your host. This format exports a model from that instance into a model file. Remember to change CONTAINER_NAME to the name of your container.
